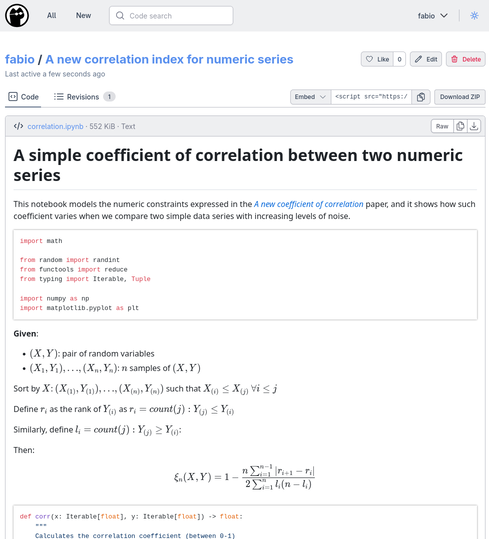

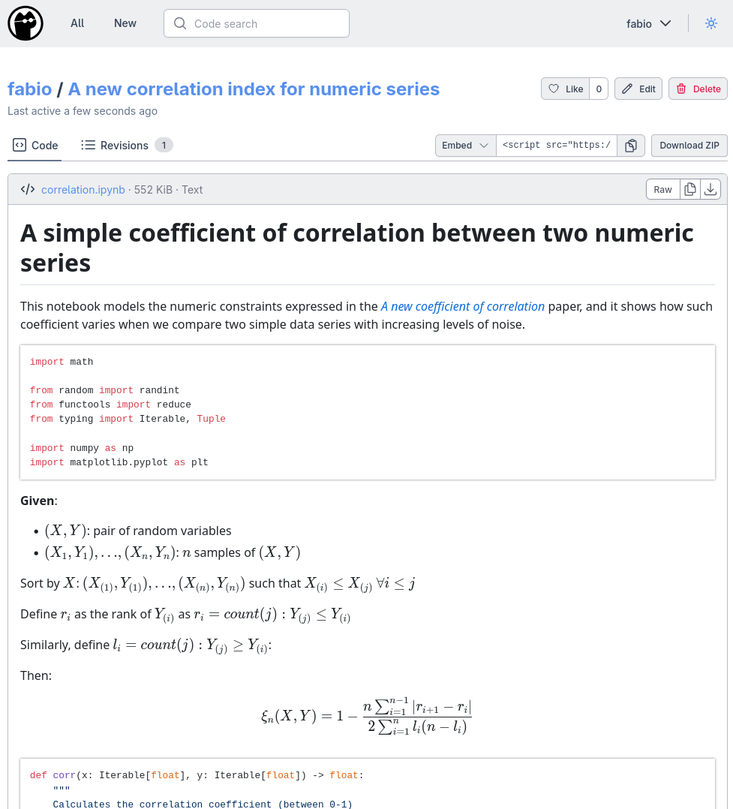

Track your location without giving up on your privacy

The Google Maps Timeline appeal

I used to love Google Maps Timeline when it came out some years ago. Permanent storage of your location data is a very nice addition to anyone's digital toolbox. It lets you immediately recall when you went on holiday a few years ago, find that club in Berlin that hosted a cool party last summer, track your lost phone before its battery runs out, or even compute more advanced statistics, like the countries you've visited most often over the past 5 years, or the longest journey you've taken over a certain time span.

Unfortunately, the trade-off is giving up your precious location data to a company with a very poor track record on user privacy, and one that employs very shady practices to get hold of your location at all times.

Additionally, Google has a poor track record of maintaining its own products in the long term, and Maps Timeline seems to be on the list of products it's going to axe at very short notice.

Foursquare nostalgia

An alternative I've been using for a long time is Swarm by Foursquare. Many people of my generation remember a time in the 2010s when Foursquare was very popular among people in their 20s and 30s. It was like Facebook, but for location sharing. It was the place to go when you were looking for recommendations in a certain area or for genuine user reviews, and you could “check in” at places and build your own timeline. It also has nice features such as geo heatmaps of all the places you've been, as well as statistics by country, region, etc.

It was so popular that many venues offered discounts to their Foursquare “mayors” (the jargon for the user with the most check-ins at a certain place over a recent period).

The gamification and social aspects were also quite strong, with mayorships, streaks, shared check-ins and leaderboards all guaranteeing its addictive success for some time.

Nowadays the Foursquare app has been separated from its social check-in features (moved to Swarm), and it's definitely far less popular than it was 10 years ago (I still open it sometimes, but out of my ~200 friends only 2 still post semi-regular updates).

Also, it requires you to manually check in to registered venues in order to build your timeline. The alternative is to give it background access to your location, so it can collect all the potential venues as you walk past them, and you can check in to them the next time you open the app. But that is, of course, a big no-no for privacy. Plus, the location features and stats are only available in the mobile app and limited to what the app provides, with no way to export your whole location history as CSV, GPX or any other format that you can easily import into another service.

Building the dataset

So over the past few years I've resorted to building my own self-hosted ingestion pipeline for my location data. I wrote an article in 2019 showing how I set it up: Tasker on my Android phone periodically runs an AutoLocation task that fetches my exact GPS location and pushes it to my MQTT broker through the MQTT Client app (currently unmaintained, but it has a nice Tasker integration), and Platypush on the other side listens to the events on the MQTT topic and stores them in my database.

I have been using this setup for more than 10 years, and I have collected half a million data points from my devices, usually at a granularity of 10 minutes.

With a few variations, the setup I covered in that article is still the one I use today to collect and store my location data on my own digital infrastructure. The article shows how to send location messages to the Platypush service over Pushbullet, using the Platypush pushbullet plugin, but it's easy to adapt it to other interfaces: MQTT, as briefly discussed above, in tandem with the Platypush mqtt plugin, or even HTTP via the REST plugin.

The main addition I've made to the event hook on the Platypush side is an intermediate call to the google.maps Platypush plugin to fetch the nearest address for the reported latitude/longitude, so the full code now looks like this:

```python
import logging
from datetime import datetime

from platypush.event.hook import hook
from platypush.message.event.geo import LatLongUpdateEvent
from platypush.utils import run

logger = logging.getLogger(__name__)

# It should be linked to a device ID registered on GPSTracker
default_device_id = "DEVICE_ID"


@hook(LatLongUpdateEvent)
def log_location_data(event: LatLongUpdateEvent, **_):
    # Use the device ID carried by the event, if any, else the default one
    device_id = getattr(event, "device_id", default_device_id)

    try:
        # Reverse-geocode the coordinates into the nearest address
        address = run(
            "google.maps.get_address_from_latlng", event.latitude, event.longitude
        )
    except Exception as e:
        logger.warning("Error while retrieving the address from the lat/long: %s", e)
        address = {}

    # Store the point (plus address details, if available) in the database
    run(
        "db.insert",
        engine="postgresql+psycopg2://gpstracker@mydb/gpstracker",
        table="location_history",
        records=[
            {
                "deviceId": device_id,
                "latitude": event.latitude,
                "longitude": event.longitude,
                "altitude": event.altitude,
                "address": address.get("address"),
                "locality": address.get("locality"),
                "country": address.get("country"),
                "postalCode": address.get("postal_code"),
                "timestamp": datetime.fromtimestamp(event.timestamp),
            }
        ],
    )
```

Having all of your location data stored in a relational database turns most location analytics problems into simple SQL queries, such as “which countries have I visited the most?”:

```sql
SELECT country, COUNT(*) AS n_points
FROM location_history
GROUP BY country
ORDER BY n_points DESC;
```
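The same kind of query can be tried out locally. Below is a minimal sketch using Python's built-in sqlite3 module (SQLite is one of the backends GPSTracker is tested with) against a hypothetical, stripped-down location_history table: the column names follow the records written by the hook above, and the extra time filter answers the "past 5 years" question from the introduction. Since points are sampled at a roughly fixed interval, the number of points per country is only a rough proxy for the time spent there.

```python
import sqlite3
from datetime import datetime, timedelta

# Hypothetical minimal schema: only the columns this sketch needs, named after
# the fields written by the Platypush hook. Timestamps are stored as ISO 8601
# strings, which compare correctly as plain text.
SCHEMA = """
CREATE TABLE location_history (
    deviceId  TEXT,
    latitude  REAL,
    longitude REAL,
    country   TEXT,
    timestamp TEXT
)
"""


def insert_points(conn, rows):
    """Insert (device_id, lat, lon, country, datetime) tuples."""
    conn.executemany(
        "INSERT INTO location_history VALUES (?, ?, ?, ?, ?)",
        [(dev, lat, lon, country, ts.isoformat()) for dev, lat, lon, country, ts in rows],
    )


def top_countries(conn, since):
    """Count the data points per country recorded at or after `since`."""
    query = """
        SELECT country, COUNT(*) AS n_points
        FROM location_history
        WHERE country IS NOT NULL AND timestamp >= ?
        GROUP BY country
        ORDER BY n_points DESC
    """
    return conn.execute(query, (since.isoformat(),)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    insert_points(conn, [
        ("phone", 52.520, 13.405, "de", datetime(2023, 7, 1, 22, 0)),
        ("phone", 52.521, 13.406, "de", datetime(2023, 7, 1, 22, 10)),
        ("phone", 45.464, 9.190, "it", datetime(2022, 3, 5, 12, 0)),
        ("phone", 40.713, -74.006, "us", datetime(2015, 1, 1, 0, 0)),
    ])
    # Roughly "the past 5 years"
    since = datetime.now() - timedelta(days=5 * 365)
    for country, n_points in top_countries(conn, since):
        print(country, n_points)
```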

The frontend problem

I have tried out several frontends to display my location data over the years.

For a while my favourite was the TrackMap plugin for Grafana. But with Grafana deprecating Angular plugins, and many Angular plugins not having been updated in a while, this plugin is at risk of becoming unusable soon. Plus, it doesn't provide many features: just a map of the points over a certain time span, without the ability to expand them or scroll through them along a timeline.

Another alternative I've used is the PhoneTrack extension for Nextcloud. It works as long as you push your data into its tables; just look at the oc_phonetrack_points table it creates in your Nextcloud database to get an idea of the structure.

However, that app also doesn't provide many features besides showing your data points on a map. And it frequently breaks when new versions of Nextcloud are released.

So in the past few weeks I decided to roll up my sleeves and build such a frontend myself.

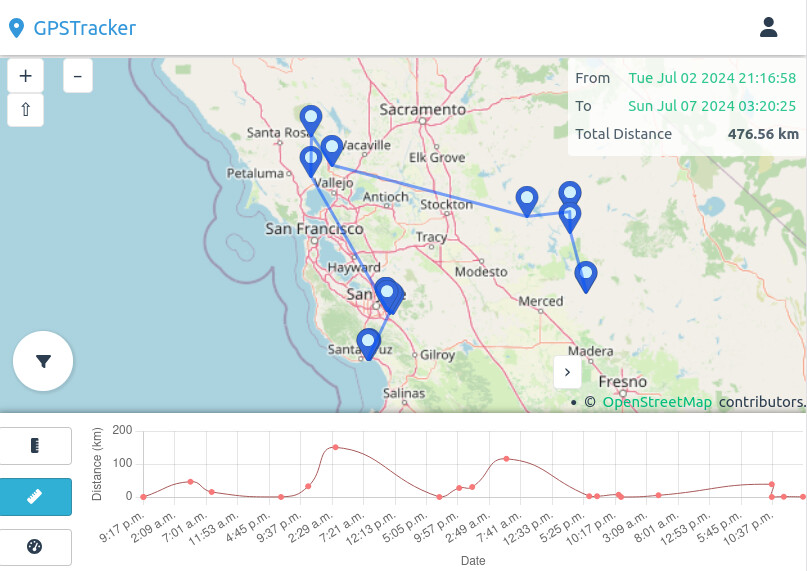

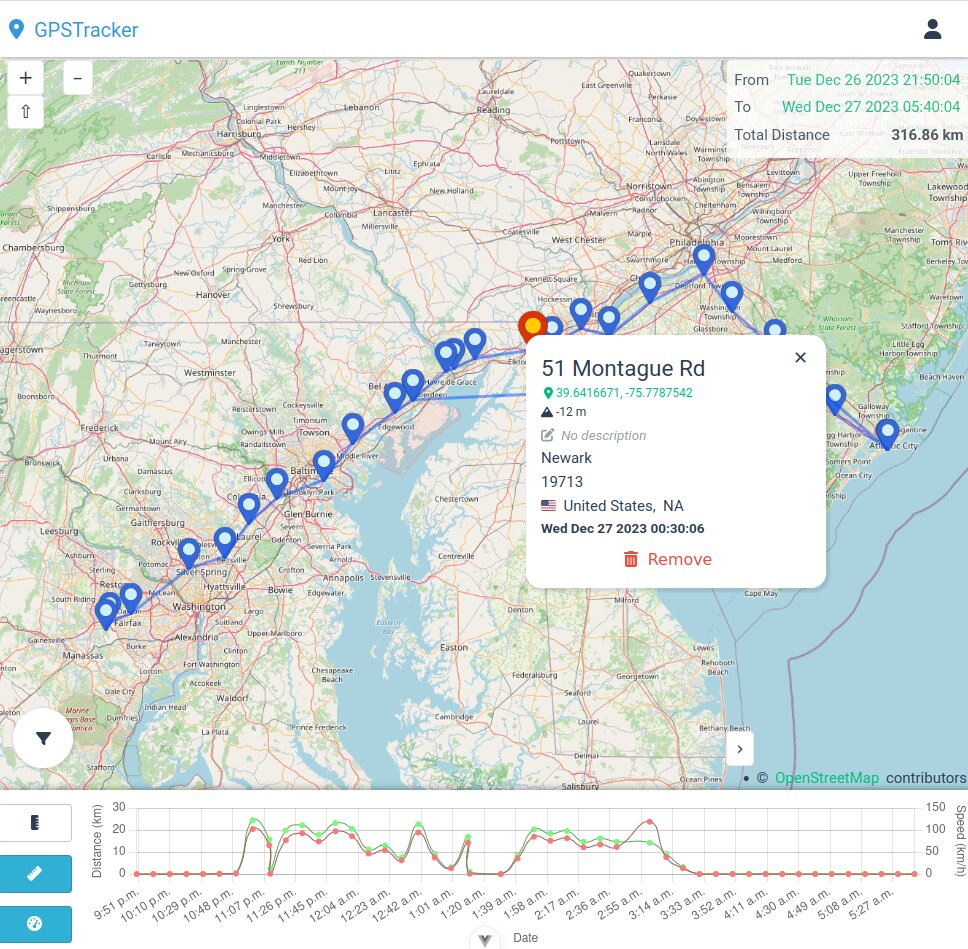

The result is GPSTracker (a GitHub mirror is also available), a web app inspired by Google Maps Timeline that makes it easy to navigate your location data, safely stored on your own digital infrastructure.

Clone the project on your local machine (an npmjs.com release and a pre-built Docker image are WIP):

```shell
git clone https://git.platypush.tech/blacklight/gpstracker
cd gpstracker
```

Copy the .env.example file to .env and set the required entries. You have to explicitly set at least the following:

- DB_URL: the provided default points to the Postgres database that runs via docker-compose; change it if you want to use another database. It is currently tested with Postgres and SQLite, but any data source compatible with Sequelize should be supported, provided that you npm install the appropriate driver.
- ADMIN_EMAIL / ADMIN_PASSWORD
- SERVER_KEY (generate it via openssl rand -base64 32)
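If you'd rather not depend on openssl, a key of the same shape (32 random bytes, base64-encoded) can be generated with Python's standard library. The sketch below also runs a hypothetical sanity check that the entries listed above are present and non-empty in .env. Its parser only understands simple KEY=VALUE lines and is an illustration, not how GPSTracker itself loads its configuration.

```python
import base64
import secrets
from pathlib import Path

# The entries the setup instructions say must be set explicitly
REQUIRED_KEYS = ("DB_URL", "ADMIN_EMAIL", "ADMIN_PASSWORD", "SERVER_KEY")


def generate_server_key(n_bytes=32):
    """Same shape as `openssl rand -base64 32`: n random bytes, base64-encoded."""
    return base64.b64encode(secrets.token_bytes(n_bytes)).decode("ascii")


def parse_env(text):
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first "=" only: base64 values may end with "="
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip().strip("\"'")
    return env


def missing_keys(env):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]


if __name__ == "__main__":
    env_file = Path(".env")
    env = parse_env(env_file.read_text()) if env_file.exists() else {}
    print("Missing entries:", ", ".join(missing_keys(env)) or "none")
    print("A fresh SERVER_KEY candidate:", generate_server_key())
```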

External data sources

If you already have your location data stored somewhere else, you can opt to specify a separate LOCATION_DB_URL (as well as LOCATION_DB_TABLE) in your environment configuration. In that case, instead of using the location_history table on the application database, data will be fetched from your specified data source.

This comes with two caveats:

1. The external table must have a deviceId column (or a custom column, with the mapping specified via the DB_LOCATION__DEVICE_ID variable) that contains the IDs of valid devices registered in the application.
2. If a device changes ownership or is deleted, the data on the remote side won't change.
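Before pointing LOCATION_DB_URL at an existing table, it may be worth checking the first caveat: which device IDs in that table don't match any device registered in GPSTracker. A minimal sketch with sqlite3, where the function name is hypothetical, the set of registered IDs is assumed to be copied by hand from the Devices page, and device_column stands in for whatever column you map via DB_LOCATION__DEVICE_ID. The query takes no parameters, so it should also run unchanged through a Postgres DB-API driver, where the double quotes keep a mixed-case name like deviceId intact.

```python
import sqlite3


def unknown_device_ids(conn, table, registered_ids, device_column="deviceId"):
    """Return the distinct device IDs in `table` that aren't in `registered_ids`.

    Identifiers can't be bound as query parameters, so the table and column
    names are interpolated in double quotes: only pass trusted names.
    """
    query = (
        f'SELECT DISTINCT "{device_column}" FROM "{table}" '
        f'WHERE "{device_column}" IS NOT NULL'
    )
    found = {row[0] for row in conn.execute(query)}
    return sorted(found - set(registered_ids))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        'CREATE TABLE location_history ("deviceId" TEXT, latitude REAL, longitude REAL)'
    )
    conn.executemany(
        "INSERT INTO location_history VALUES (?, ?, ?)",
        [("uuid-phone", 52.52, 13.40), ("uuid-old-tablet", 45.46, 9.19)],
    )
    # Hypothetical set of device UUIDs copied from GPSTracker's Devices page
    registered = {"uuid-phone"}
    print(unknown_device_ids(conn, "location_history", registered))
```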

Docker build

```shell
docker compose up
```

This will build a container with the Web application from the source directory and create a Postgres container to store your db (make sure to back it up regularly!)

Local build

Requirements:

make

You can then run the application via:

```shell
npm run start
```

Initial setup

Once the application is running, open it in your favourite Web browser (by default it will listen on http://localhost:3000).

Enter the credentials you specified in ADMIN_EMAIL / ADMIN_PASSWORD to log in. You can then create a new device (top right menu → Devices). Take note of the UUID assigned to it. You may also want to create an API key (top right menu → API) if you want to ingest data over the provided POST API.

Ingestion

The contents of my previous article, which used Tasker+Platypush to forward and store location data, also apply to GPSTracker. You can either use your existing database via LOCATION_DB_URL, or configure the Platypush hook to write directly into the application database.

A more user-friendly alternative, however, is the GPSLogger Android app.

It already provides a mechanism to periodically fetch location updates and push them to your favourite service.

Select Custom URL from the settings menu, and insert the URL of your GPSTracker service.

Under the HTTP Headers section, add Authorization: Bearer <YOUR-KEY>, where <YOUR-KEY> is the API key you generated in GPSTracker.

Under HTTP Body, add the following:

```
[{
  "deviceId": "YOUR-DEVICE-ID",
  "latitude": %LAT,
  "longitude": %LON,
  "altitude": %ALT,
  "description": "%DESC",
  "timestamp": "%TIME"
}]
```

Set URL to http(s)://your-gpstracker-hostname/api/v1/gpsdata and HTTP Method to POST.

A full curl equivalent may look like this:

```shell
curl -XPOST \
  -H "Authorization: Bearer your-api-token" \
  -H "Content-Type: application/json" \
  -d '[{
    "deviceId": "your-device-id",
    "latitude": 40.7128,
    "longitude": -74.0060,
    "address": "260 Broadway",
    "locality": "New York, NY",
    "country": "us",
    "postalCode": "10007",
    "description": "New York City Hall",
    "timestamp": "2021-01-01T00:00:00Z"
  }]' http://localhost:3000/api/v1/gpsdata
```
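The same request can also be built from a script, e.g. to push points from devices that don't run GPSLogger. This is a sketch using only Python's urllib: the endpoint, headers and field names are taken from the curl example above, while which fields the API actually requires is an assumption worth checking against the project itself. The (hypothetical) helper only builds the request, so it can be inspected before anything is sent.

```python
import json
import urllib.request


def build_gpsdata_request(base_url, api_key, points):
    """Build (without sending) a POST request to GPSTracker's /api/v1/gpsdata."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/v1/gpsdata",
        data=json.dumps(points).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_gpsdata_request(
        "http://localhost:3000",
        "your-api-token",
        [{
            "deviceId": "your-device-id",
            "latitude": 40.7128,
            "longitude": -74.0060,
            "timestamp": "2021-01-01T00:00:00Z",
        }],
    )
    # Inspect the request; pass it to urllib.request.urlopen(req) to send it
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

To actually send it, call urllib.request.urlopen(req) inside a with block and check the response status.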

Then hit Start Logging whenever you want to share your data, or keep it running in the background, and you should soon see your UI being populated with your GPS points.

The UI

The UI uses OpenStreetMap to display location data.

It also provides a timeline at the bottom of the page, with information such as altitude, straight-line distance travelled between points, and estimated speed shown on the graph. Scrolling on the timeline shows you the nearest point where you were around that time.
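The straight-line distance and estimated speed between consecutive points can be approximated as follows. This is not necessarily how GPSTracker computes them: the sketch uses the standard haversine (great-circle) formula, a common choice for the distance between two GPS fixes on a spherical Earth, and divides it by the time elapsed between the fixes. The function names are hypothetical.

```python
import math
from datetime import datetime

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    # Clamp to 1.0 so rounding errors can't push asin out of its domain
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))


def segment_stats(points):
    """For consecutive (lat, lon, datetime) points, return (distance_m, speed_kmh).

    Speed is None when two points share a timestamp or are out of order.
    """
    stats = []
    for (lat1, lon1, t1), (lat2, lon2, t2) in zip(points, points[1:]):
        distance = haversine_m(lat1, lon1, lat2, lon2)
        seconds = (t2 - t1).total_seconds()
        speed = distance / seconds * 3.6 if seconds > 0 else None  # m/s -> km/h
        stats.append((distance, speed))
    return stats


if __name__ == "__main__":
    track = [
        (52.5200, 13.4050, datetime(2024, 5, 1, 10, 0)),
        (52.5163, 13.3777, datetime(2024, 5, 1, 10, 10)),
    ]
    for distance, speed in segment_stats(track):
        print(f"{distance:.0f} m, {speed:.1f} km/h")
```

With points sampled every 10 minutes, as in the setup described earlier, this speed is an average over the whole interval, so it will underestimate any detour taken between two fixes.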

Coming up

This application is still in its infancy, and many more features are coming up, among them:

- Ability to import GPX/CSV data (though the POST /api/v1/gpsdata endpoint currently provides a comparable alternative).
- Ability to share sessions with other users, e.g. to share travel itineraries or temporary real-time tracking.
- More stats, e.g. the countries/regions you've been to, in a nice zoomable heatmap or table format.
- Tracking more metrics, e.g. battery level and state, GPS accuracy and (actual) recorded speed.
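Until GPX import lands, existing tracks can be pushed through the POST /api/v1/gpsdata endpoint instead. Here is a minimal sketch that converts track points into that payload with Python's xml.etree module, assuming GPX 1.1 files and the payload field names from the curl example earlier. Points without a time element are skipped, since a location history entry without a timestamp isn't very useful. The function and script names are hypothetical.

```python
import json
import sys
import xml.etree.ElementTree as ET

# GPX 1.1 namespace (GPX 1.0 files use a different one and aren't handled here)
GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}


def gpx_to_payload(gpx_data, device_id):
    """Convert GPX 1.1 track points into a list of gpsdata API records."""
    root = ET.fromstring(gpx_data)  # accepts str or bytes
    records = []
    for trkpt in root.iterfind(".//gpx:trkpt", GPX_NS):
        time = trkpt.find("gpx:time", GPX_NS)
        if time is None or not (time.text or "").strip():
            continue  # skip points without a timestamp
        record = {
            "deviceId": device_id,
            "latitude": float(trkpt.get("lat")),
            "longitude": float(trkpt.get("lon")),
            "timestamp": time.text.strip(),
        }
        ele = trkpt.find("gpx:ele", GPX_NS)
        if ele is not None and ele.text:
            record["altitude"] = float(ele.text)
        records.append(record)
    return records


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python gpx2payload.py track.gpx YOUR-DEVICE-ID
    with open(sys.argv[1], "rb") as f:
        print(json.dumps(gpx_to_payload(f.read(), sys.argv[2]), indent=2))
```

For large files it's probably wise to split the resulting list into batches and POST them one at a time, rather than sending a single huge request.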